This app streams events from PostHog into BigQuery as they are ingested.

Installation

The BigQuery Export app requires either PostHog Cloud, or a self-hosted PostHog instance running version 1.30.0 or later.

Not running 1.30.0? Find out how to update your self-hosted PostHog deployment!

Enabling the app

- Visit the 'Apps' page from PostHog.

- Search for 'BigQuery' and select the 'BigQuery Export' app.

- Click on the blue settings icon and follow the configuration steps:

- Upload your Google Cloud key

.jsonfile. (See below for instructions on how to retrieve this.) - Enter your Dataset ID

- Enter your Table ID

- Upload your Google Cloud key

- Watch events roll into BigQuery

Setting up BigQuery access

To set the right permissions up for the BigQuery plugin, you'll need:

- A service account.

- A dataset which has permissions allowing the service account to access it.

Here's how to set these up so that the app has access only to the table it needs:

Create a service account. Keep hold of the JSON file at the end of these steps for setting up the app, and remember the name too.

Create a role which has only the specific permissions the PostHog BigQuery app requires (listed below), or use the built in

BigQuery DataOwnerpermission. If you create a custom role, you will need:bigquery.datasets.getbigquery.tables.createbigquery.tables.getbigquery.tables.listbigquery.tables.updateData

Create a dataset within a BigQuery project (ours is called

posthog, but any name will do).Follow the instructions on granting access to a dataset in BigQuery to ensure your new service account has been granted either the role you created or the "BigQuery Data Owner" permission.

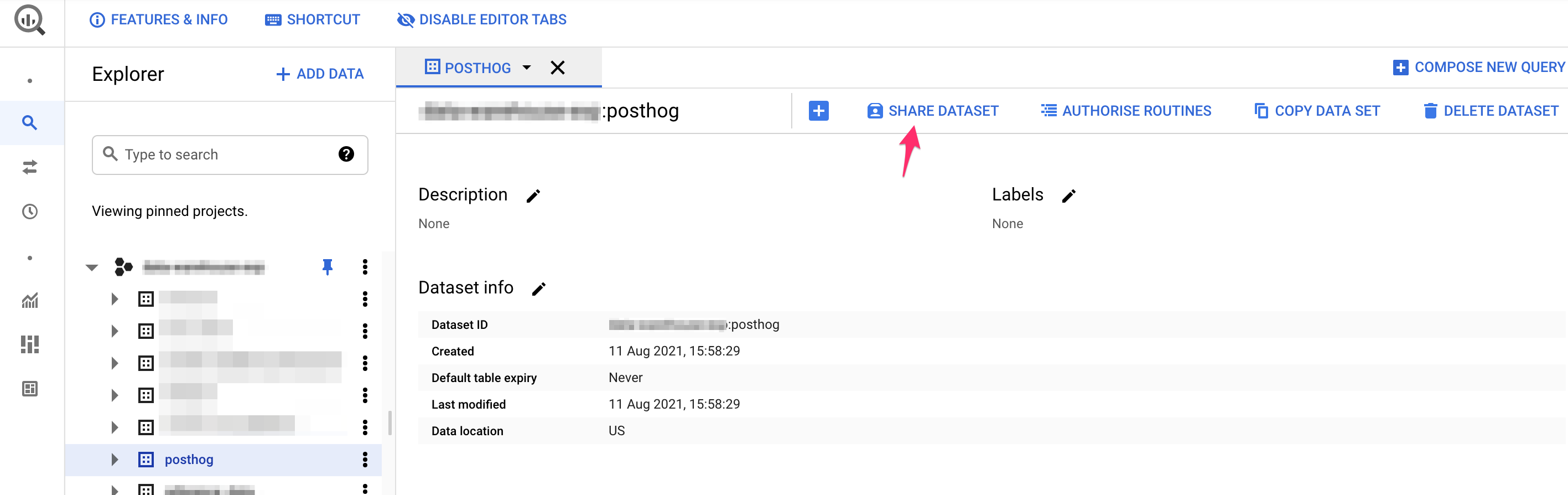

Use the Share Dataset button to share your dataset with your new service account and either the BigQuery DataOwner role, or your custom role created above. In the below, we've used a custom role PostHog Ingest.

That's it! Once you've done the steps above, your data should start flowing from PostHog to BigQuery.

Event schema

Here is a summary of all the fields that are exported to BigQuery.

| Field | Type | Description |

|---|---|---|

| uuid | STRING | The unique ID of the event within PostHog |

| event | STRING | The name of the event that was sent |

| properties | STRING | A JSON object with all the properties sent along with an event |

| elements | STRING | Elements surrounding an autocaptured event |

| set | STRING | A JSON object with any person properties sent with the $set field |

| set_once | STRING | A JSON object with any person properties sent with the $set_once field |

| distinct_id | STRING | The distinct_id of the user who sent the event |

| team_id | STRING | The team_id for the event |

| ip | STRING | The IP address that was sent with the event |

| site_url | STRING | This is always set as an empty string for backwards compatibility |

| timestamp | TIMESTAMP | The timestamp when the event was ingested into PostHog |

| bq_ingested_timestamp | TIMESTAMP | The timestamp when the event was sent to BigQuery |

Configuration

| Option | Description |

|---|---|

JSON file with your google cloud keyType: attachment Required: True | |

Dataset IDType: string Required: True | In case Google Cloud tells you "my-project-123245:Something", use "Something" as the ID. |

Table IDType: string Required: True | A table will be created if it does not exist. |

Events to ignoreType: string Required: False | Comma separated list of events to ignore |

Maximum upload size in bytesType: string Required: False | Default 1MB. Upload events after buffering this many of them. The value must be between 1 MB and 10 MB. |

Export events at least every X secondsType: string Required: False | Default 30 seconds. If there are events to upload and this many seconds has passed since the last upload, then upload the queued events. The value must be between 1 and 600 seconds. |

Export the property $elements on events that aren't called `$autocapture`?Type: choice Required: False | Advanced |

Troubleshooting

What should I do if events aren't showing up?

The best way to debug events not showing up is by viewing the logs, which can be accessed by clicking the 'Logs' icon just to the left of the blue settings button.

This will bring up a new panel with a list of all the most recent logs from our app.

Take a look back through the log and see if there are any ERROR messages that can help provide more information on why the export is failing.

Tip: You can filter down and only view

ERRORorWARNmessages using the toggles at the top of the panel next to 'Show logs of type'

Why am I seeing duplicate PostHog events in BigQuery?

There's a very rare case when duplicate events appear in BigQuery. This happens due to network errors, where the export seems to have failed, yet it actually reaches BigQuery.

While this shouldn't happen, if you find duplicate events in BigQuery, follow these Google Cloud docs to manually remove them.

Here is an example query based on the Google Cloud docs that would remove duplicates:

Further information

Who created this app?

We'd like to thank PostHog team members Yakko Majuri, Marius Andra, Neil Kakkar, Michael Matloka and community member Edward Hartwell Goose for creating this BigQuery Export app.

Who maintains this app?

This app is maintained by PostHog. If you have issues with the app not functioning as intended, please raise a bug report to let us know!

What if I have feedback on this app?

We love feature requests and feedback! Please create an issue to tell us what you think.

What if my question isn't answered above?

We love answering questions. Ask us anything via our Support page.

You can also join the PostHog Community Slack group to collaborate with others and get advice on developing your own PostHog apps.